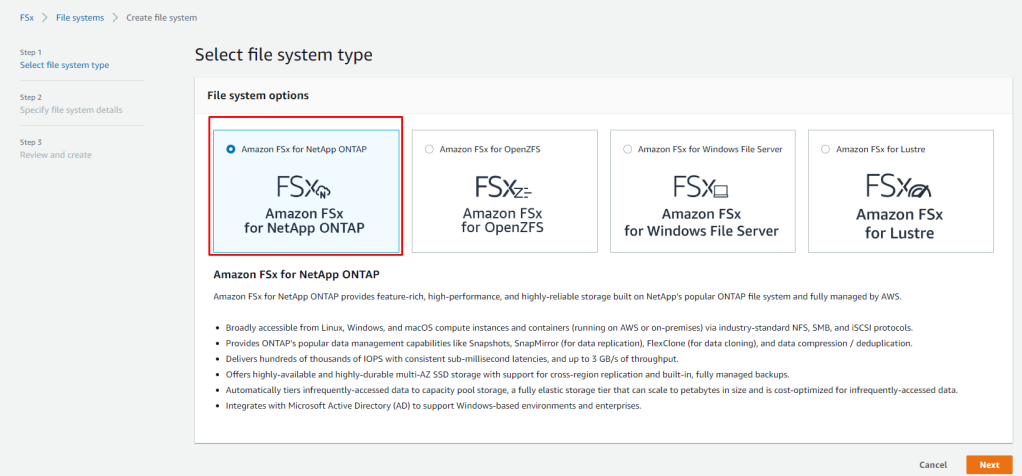

Amazon FSx for NetApp ONTAP provides fully managed shared storage in the AWS Cloud with the popular data access and management capabilities of ONTAP and this blog post we are going to use these volumes mount as Persistent Volumes on Tanzu Kubernetes Clusters running on VMware Cloud on AWS

With Amazon FSx for NetApp ONTAP, you pay only for the resources you use. There are no minimum fees or set-up charges. There are five Amazon FSx for NetApp ONTAP components to consider when storing and managing your data: SSD storage, SSD IOPS, capacity pool usage, throughput capacity, and backups.

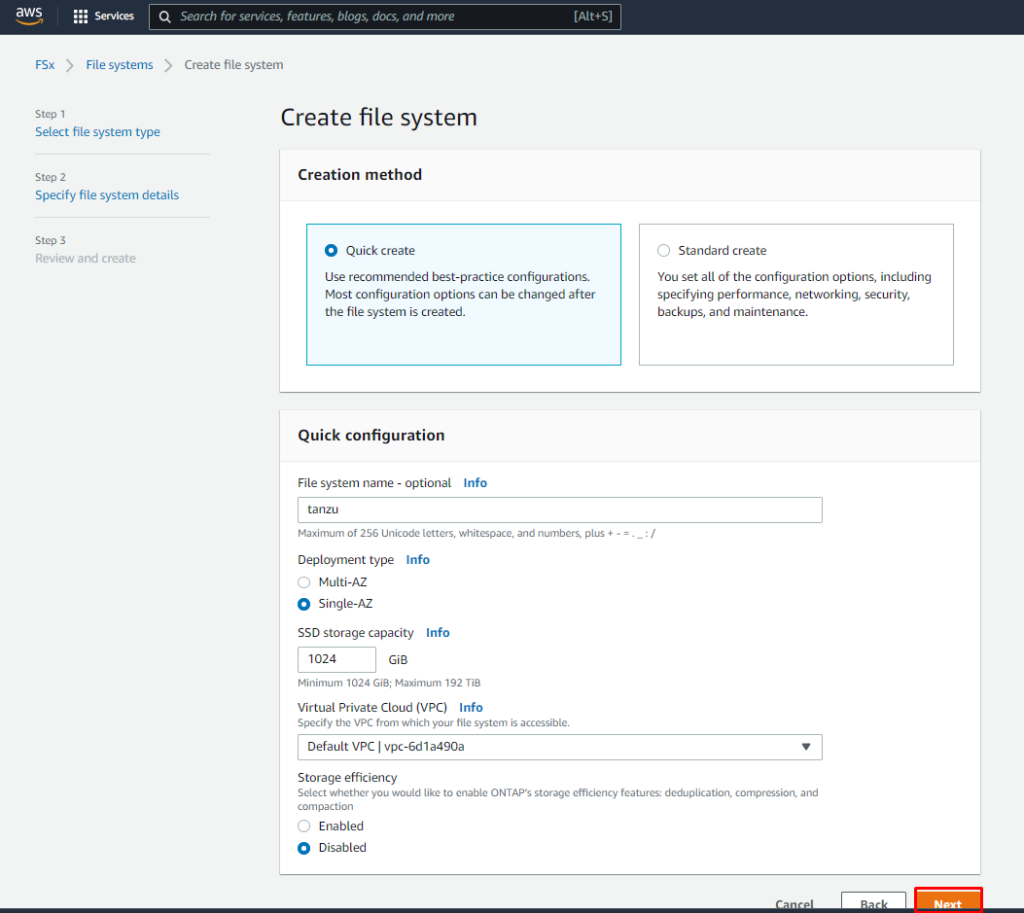

The Amazon FSx console has two options for creating a file system – Quick create option and Standard create option. To rapidly and easily create an Amazon FSx for NetApp ONTAP file system with the service recommended configuration, I use the Quick create option.

The Quick create option creates a file system with a single storage virtual machine (SVM) and one volume. The Quick create option configures this file system to allow data access from Linux instances over the Network File System (NFS) protocol.

In the Quick configuration section, for File system name – optional, enter a name for your file system.

For Deployment type choose Multi-AZ or Single-AZ.

- Multi-AZ file systems replicate your data and support failover across multiple Availablity Zones in the same AWS Region.

- Single-AZ file systems replicate your data and offer automatic failover within a single Availability Zone, for this post i am creating in Single AZ

- SSD storage capacity, specify the storage capacity of your file system, in gibibytes (GiBs). Enter any whole number in the range of 1,024–196,608.

- For Virtual Private Cloud (VPC), choose the Amazon VPC that is associate with your VMware Cloud on AWS SDDC.

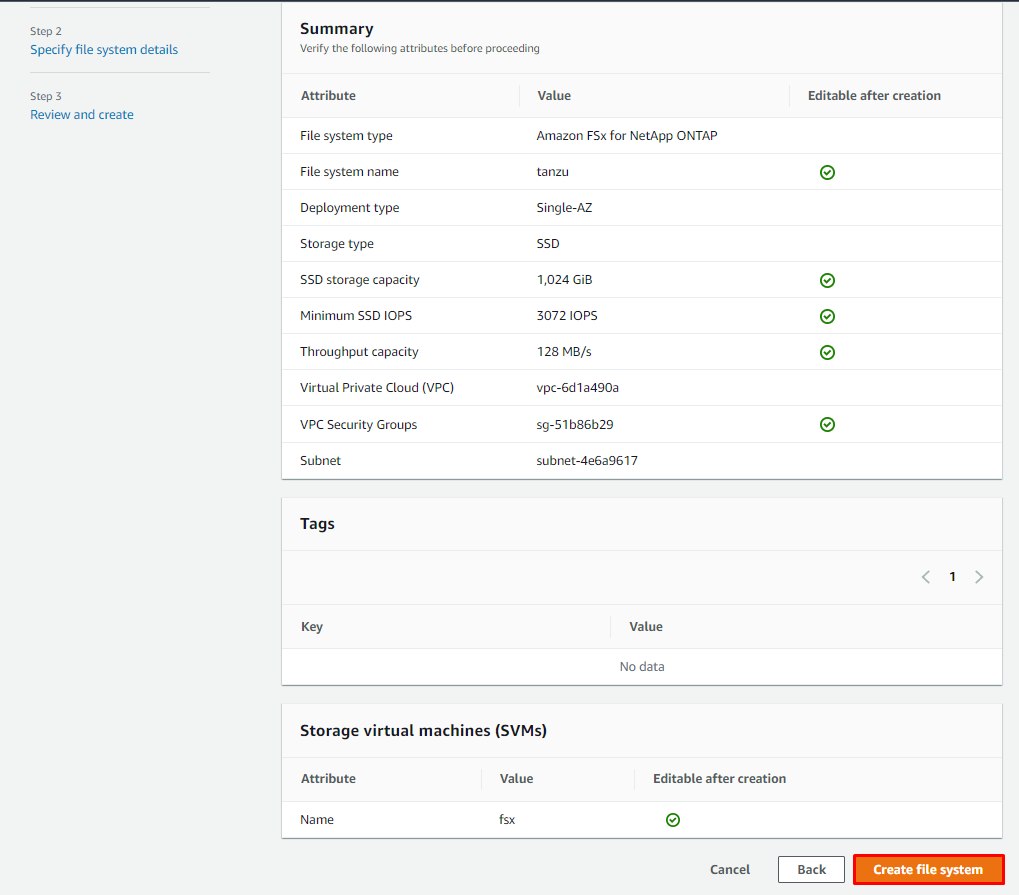

Review the file system configuration shown on the Create ONTAP file system page. For your reference, note which file system settings you can modify after the file system is created.

Choose Create file system.

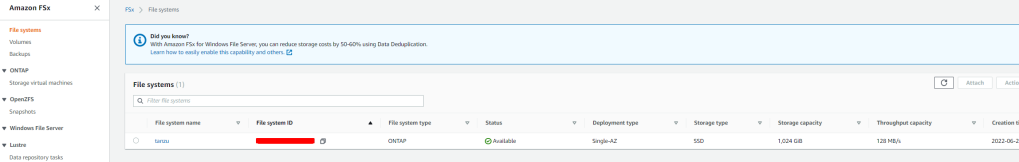

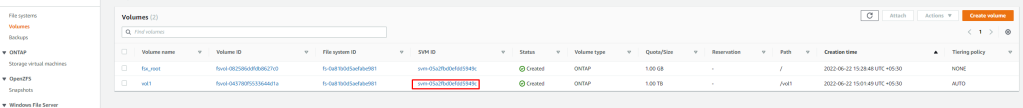

Quick create creates a file system with one SVM (named fsx) and one volume (named vol1). The volume has a junction path of /vol1 and a capacity pool tiering policy of Auto.

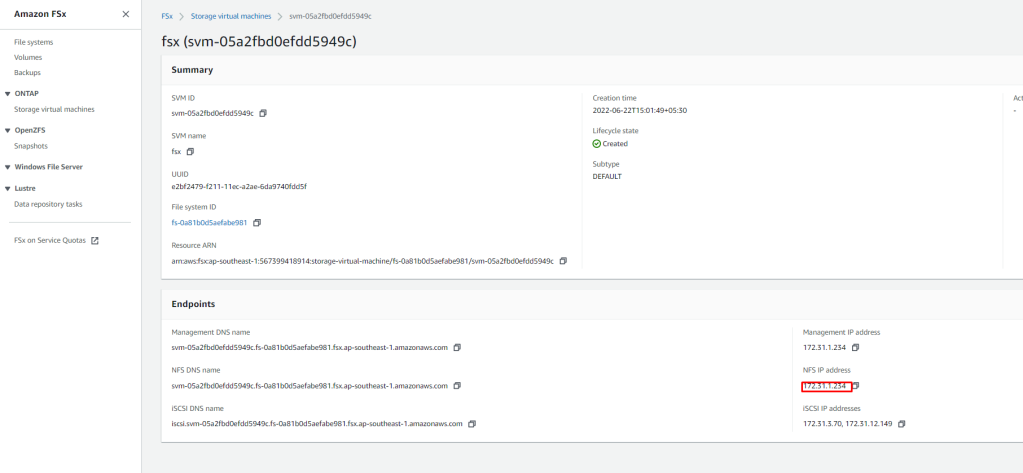

For us to use this SVM, we need to get the IP address of SVM for NFS , Click on SVM ID and take a note of this IP, we will use this IP in our NFS configurations for Tanzu.

Kubernetes NFS-Client Provisioner

NFS subdir external provisioner is an automatic provisioner that use your existing and already configured NFS server to support dynamic provisioning of Kubernetes Persistent Volumes via Persistent Volume Claims. Persistent volumes are provisioned as ${namespace}-${pvcName}-${pvName}.

More Details – Explained here in detail https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

I am deploying this on my Tanzu Kubernetes cluster which is deployed on VMware Cloud on AWS.

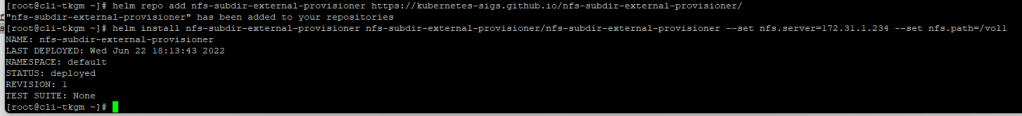

- Add the helm repo –

#helm repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/

- Install using as below:

#helm install nfs-subdir-external-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner \

--set nfs.server=<IP address of Service> \

--set nfs.path=/<Volume Name>

#My command will be like this#

#helm install nfs-subdir-external-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner \

--set nfs.server=172.31.1.234 \

--set nfs.path=/vol1

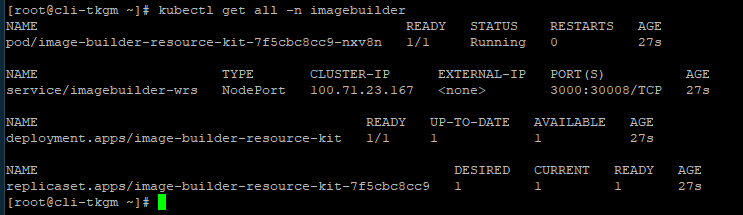

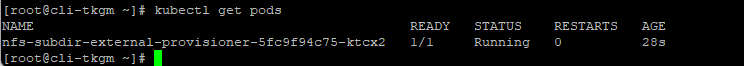

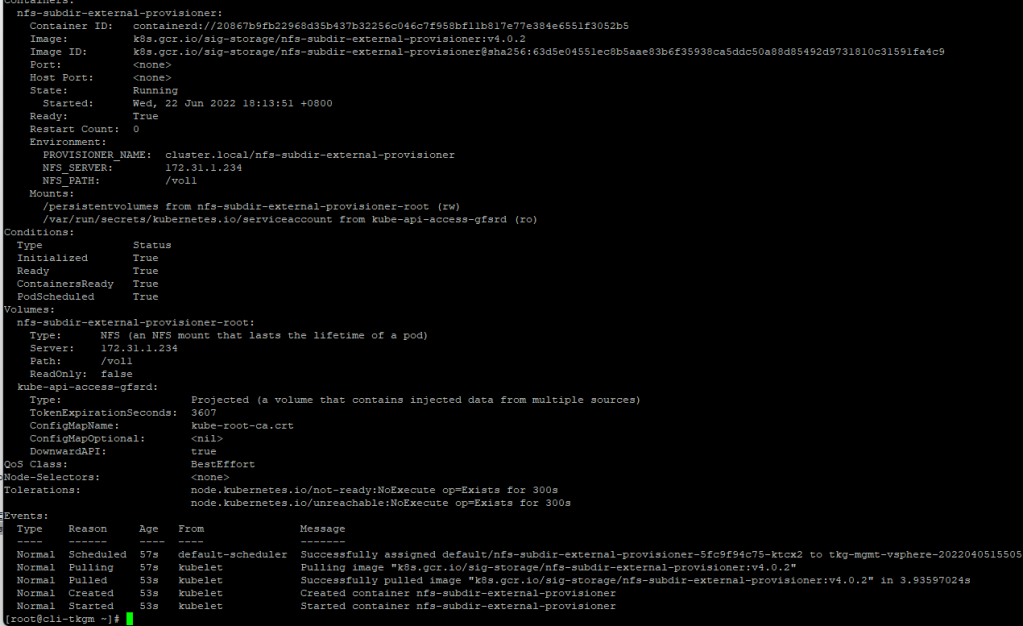

Post installation of chart, you can check the status of Pod, it is not in running state then describe and see where it stuck

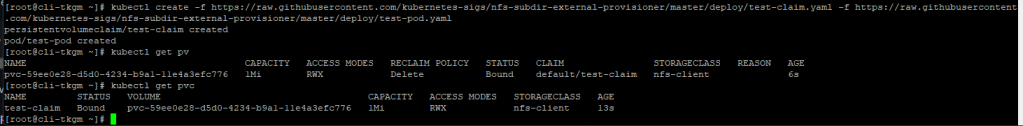

Finally, Test Your Environment!

Now we’ll test your NFS subdir external provisioner by creating a persistent volume claim and a pod that writes a test file to the volume. This will make sure that the provisioner is provisioning and that the Amazon FSx for NetApp ONTAP service is reachable and writable.

As you can see deployed application created an PV and PVC successfully on Amazon FSx for NetApp ONTAP

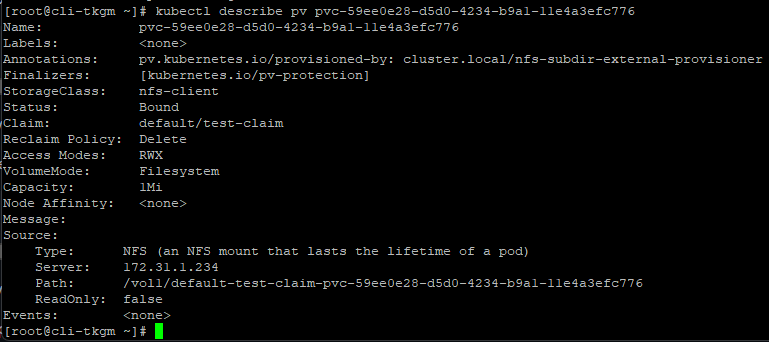

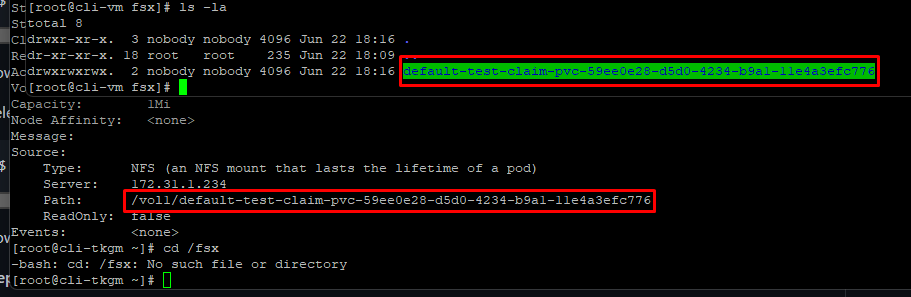

Describe the Persistent Volume to see the source of it, as you can see below it is created on NFS running on SVM having IP – 172.31.1.234

This is the power of VMware Cloud on AWS and AWS native services, customers can use any AWS native service without worrying about egress charges as well as security as everything is being configured and accessed over the private connections.