VMware Tanzu Kubernetes Grid (TKG) is a multi-cloud Kubernetes footprint that customers and partners can run on-premises in vSphere, on VMware Cloud on AWS, and in the public cloud on Amazon EC2 and Microsoft Azure VMs.

Container orchestration through Kubernetes is now built into the vSphere 7 platform. As a VMware Cloud on AWS customer, you can take advantage of this new functionality to build Kubernetes clusters on the same platform you've grown accustomed to using to manage your virtual infrastructure.

Take control of Cloud Resources and give freedom to Developers based on Personas

Virtualization Administrator: Defines resource allocations and permissions so that users can create their own Kubernetes clusters according to their own specifications. This includes access policies, storage policies, and memory and CPU restrictions for teams needing Kubernetes access.

Developer or Platform Administrator: They can create new Kubernetes clusters within the defined access policies, upgrade those clusters and scale clusters within the approved resource allocations.

VMware recognizes that not all environments are running on top of vSphere. Tanzu Kubernetes Grid (TKG) leverages the same Cluster API engine as VMware Tanzu to manage cluster lifecycles, and can run on any infrastructure. VMware provides three variants of TKG:

- Tanzu Kubernetes Grid Multi-Cloud (TKGm): An installer-driven wizard that sets up a Kubernetes environment to run across multiple clouds, for example on AWS EC2 or Azure native VMs.

- Tanzu Kubernetes Grid Service (TKGS), also known as vSphere with Tanzu: Natively integrated with vSphere 7 and later. The basic version is available to customers at no extra cost on VCF on-premises as well as on VMware Cloud on AWS.

- Tanzu Kubernetes Grid Integrated Edition: VMware Tanzu Kubernetes Grid Integrated Edition (formerly known as VMware Enterprise PKS) is a Kubernetes-based container solution with advanced networking, a private container registry, and life cycle management.

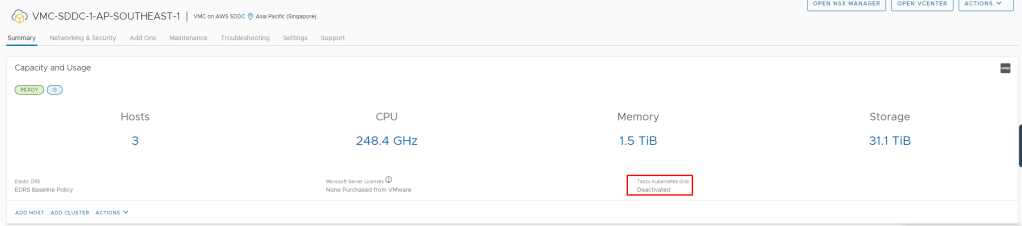

Enable Tanzu Service on VMware Cloud on AWS

Prerequisites:

- Make sure an SDDC with at least three nodes is deployed and running with enough available resources (at least 112 GB of available memory and enough free capacity to support 16 vCPUs).

- Get three CIDR blocks for the deployment. These three ranges must not overlap with the Management CIDR or with any other networks used on-premises or in the VMware Cloud on AWS SDDC.

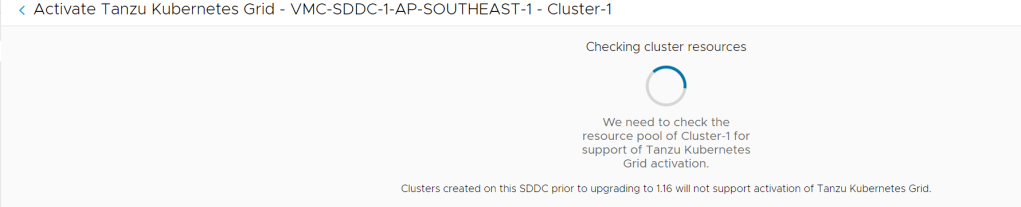

- You can activate Tanzu Kubernetes Grid in any SDDC at version 1.16 and later.

- If the Edge cluster has been configured with the medium configuration, the SDDC cluster requires a minimum of three hosts for activation.

- If the Edge cluster has been configured with the large configuration, the SDDC cluster requires a minimum of four hosts for activation.
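The prerequisites above can be turned into a quick pre-flight check before you open the console. The following is only a sketch: the `SddcCluster` fields and the `activation_blockers` helper are hypothetical names made up for illustration, the values have to be read off your own SDDC by hand, and the version comparison assumes a plain dotted numeric version string such as "1.16".

```python
from dataclasses import dataclass

# Thresholds taken from the prerequisite list above.
MIN_SDDC_VERSION = (1, 16)
MIN_FREE_MEMORY_GB = 112
MIN_FREE_VCPUS = 16
MIN_HOSTS_BY_EDGE_SIZE = {"medium": 3, "large": 4}


@dataclass
class SddcCluster:
    """Hypothetical summary of an SDDC cluster, filled in manually."""
    sddc_version: str      # assumed to look like "1.16" or "1.18"
    hosts: int
    edge_size: str         # "medium" or "large"
    free_memory_gb: float
    free_vcpus: int


def version_tuple(version: str) -> tuple:
    """Turn "1.16" into (1, 16) so that 1.9 compares lower than 1.16."""
    return tuple(int(part) for part in version.split("."))


def activation_blockers(cluster: SddcCluster) -> list:
    """Return the unmet prerequisites; an empty list means none were found."""
    blockers = []
    if version_tuple(cluster.sddc_version) < MIN_SDDC_VERSION:
        blockers.append("SDDC version is older than 1.16")
    min_hosts = MIN_HOSTS_BY_EDGE_SIZE[cluster.edge_size.lower()]
    if cluster.hosts < min_hosts:
        blockers.append(f"{cluster.edge_size} Edge needs at least {min_hosts} hosts")
    if cluster.free_memory_gb < MIN_FREE_MEMORY_GB:
        blockers.append("less than 112 GB of memory available")
    if cluster.free_vcpus < MIN_FREE_VCPUS:
        blockers.append("not enough free capacity for 16 vCPUs")
    return blockers


if __name__ == "__main__":
    print(activation_blockers(SddcCluster("1.18", 3, "medium", 200, 40)))
```

The activation wizard performs its own resource check, so this is only a convenience for planning ahead.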

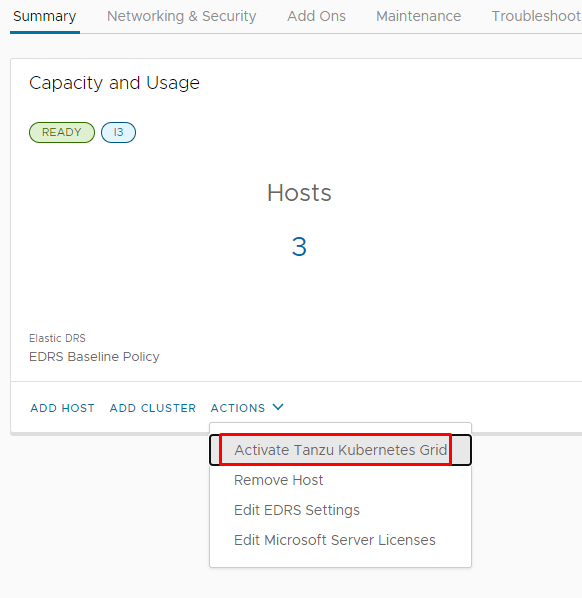

Once the prerequisites are in place, go to your VMware Cloud on AWS SDDC and click on “Activate the Tanzu Kubernetes Service”.

The activation process checks the required resources and only moves ahead if the prerequisites are met.

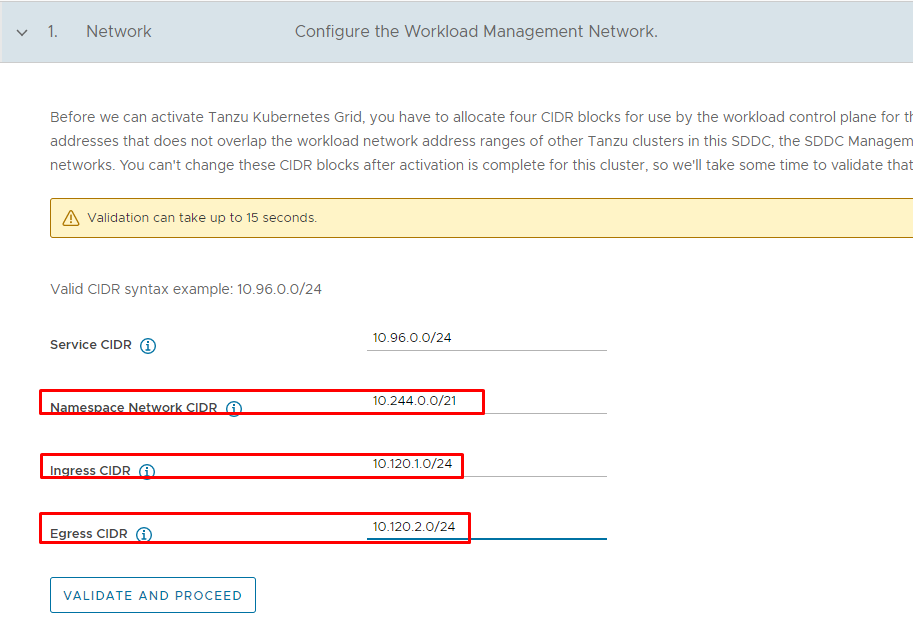

On the next screen:

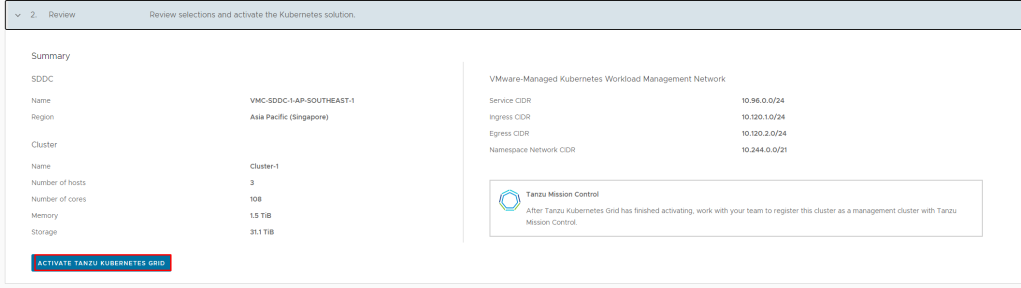

- Leave the Service CIDR as default or pick one of your choice. It must be non-overlapping and is used for the Tanzu supervisor services of the cluster.

- Enter the “Namespace Network CIDR”, non-overlapping

- Enter an “Ingress CIDR”, non-overlapping

- Enter an “Egress CIDR”, non-overlapping

- Next, click on “Validate and Proceed”

NOTE: CIDR blocks of size 16, 20, 23, or 26 are supported, and must be in one of the “private address space” blocks defined by RFC 1918 (10.0.0.0/8, 172.16.0.0/12, or 192.168.0.0/16).
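If you want to sanity-check your CIDR plan before typing it into the wizard, the rules in the note can be sketched with Python's standard `ipaddress` module. This is an illustration only, under two assumptions: that "size" in the note means the prefix length (/16, /20, /23, /26), and that the example ranges below are placeholders rather than values from a real SDDC. `check_cidrs` is a hypothetical helper, not part of any VMware tool.

```python
import ipaddress

# Assumption: "size" in the note refers to the CIDR prefix length.
SUPPORTED_PREFIXES = {16, 20, 23, 26}
RFC1918_BLOCKS = [
    ipaddress.ip_network(block)
    for block in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]


def check_cidrs(candidates: dict, reserved: dict) -> list:
    """Return a list of problems; an empty list means the plan looks fine.

    candidates: name -> CIDR for the ranges you plan to enter in the wizard
    reserved:   name -> CIDR for networks already in use (Management, on-prem, ...)
    """
    problems = []
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in candidates.items()}
    used = {name: ipaddress.ip_network(cidr) for name, cidr in reserved.items()}

    for name, net in nets.items():
        if net.prefixlen not in SUPPORTED_PREFIXES:
            problems.append(f"{name}: /{net.prefixlen} is not a supported size")
        if not any(net.subnet_of(block) for block in RFC1918_BLOCKS):
            problems.append(f"{name}: {net} is outside RFC 1918 private space")

    names = list(nets)
    for index, first in enumerate(names):
        # Compare each candidate with the remaining candidates...
        for second in names[index + 1:]:
            if nets[first].overlaps(nets[second]):
                problems.append(f"{first} overlaps {second}")
        # ...and with every network that is already in use.
        for other, other_net in used.items():
            if nets[first].overlaps(other_net):
                problems.append(f"{first} overlaps {other}")
    return problems


if __name__ == "__main__":
    plan = {  # placeholder ranges for illustration
        "namespace": "10.244.0.0/20",
        "ingress": "10.245.0.0/23",
        "egress": "10.245.2.0/23",
    }
    in_use = {"management": "10.2.0.0/16"}
    print(check_cidrs(plan, in_use) or "no problems found")
```

The wizard's own "Validate and Proceed" step remains the authority; this only catches the obvious mistakes early.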

Finally, once validation is done, click on “Activate Tanzu Kubernetes Grid”.

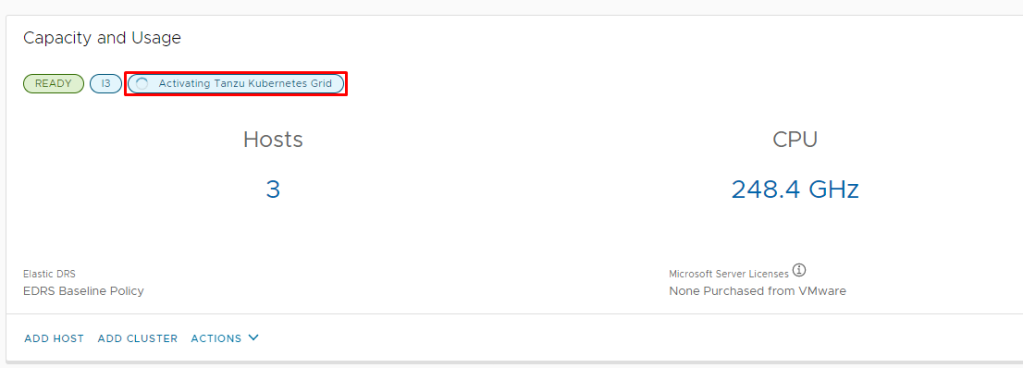

This starts the activation process, and you should see “Activating Tanzu Kubernetes Grid” on your SDDC tile. The process should complete within 20-30 minutes.

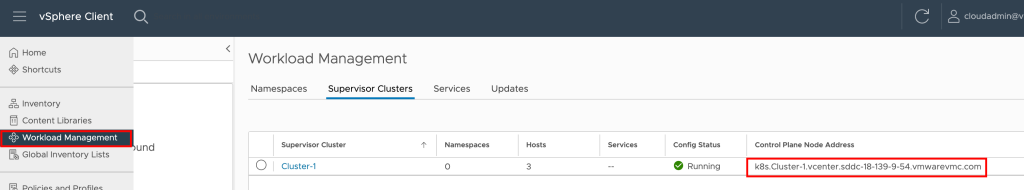

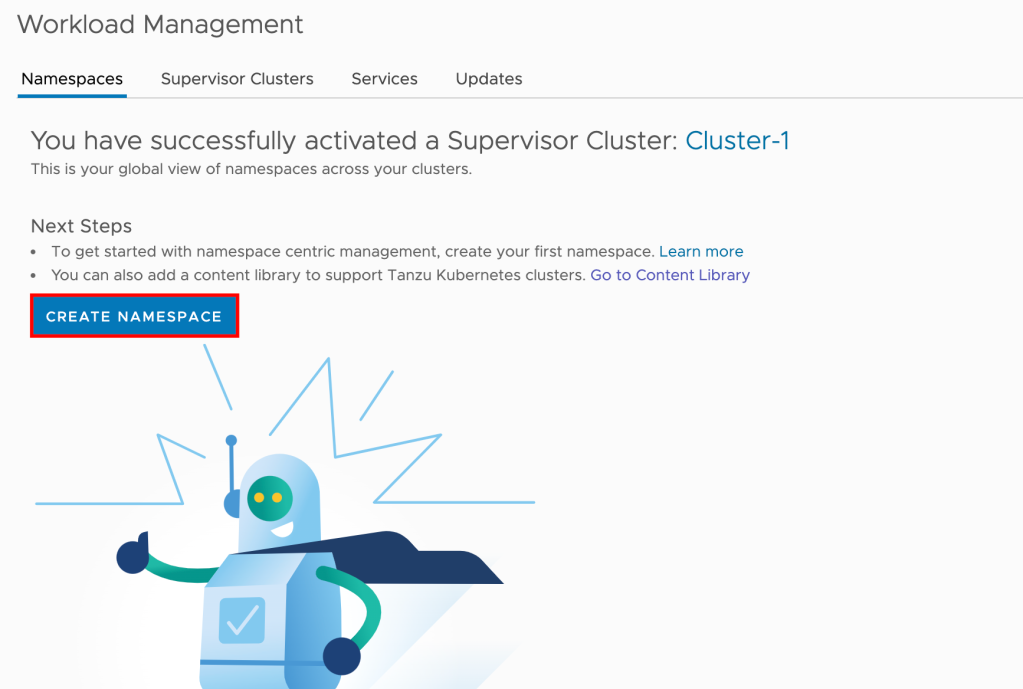

It is that easy to enable your SDDC to run VMs and containers together. When activation is complete, log in to the SDDC vCenter and click on Workload Management.

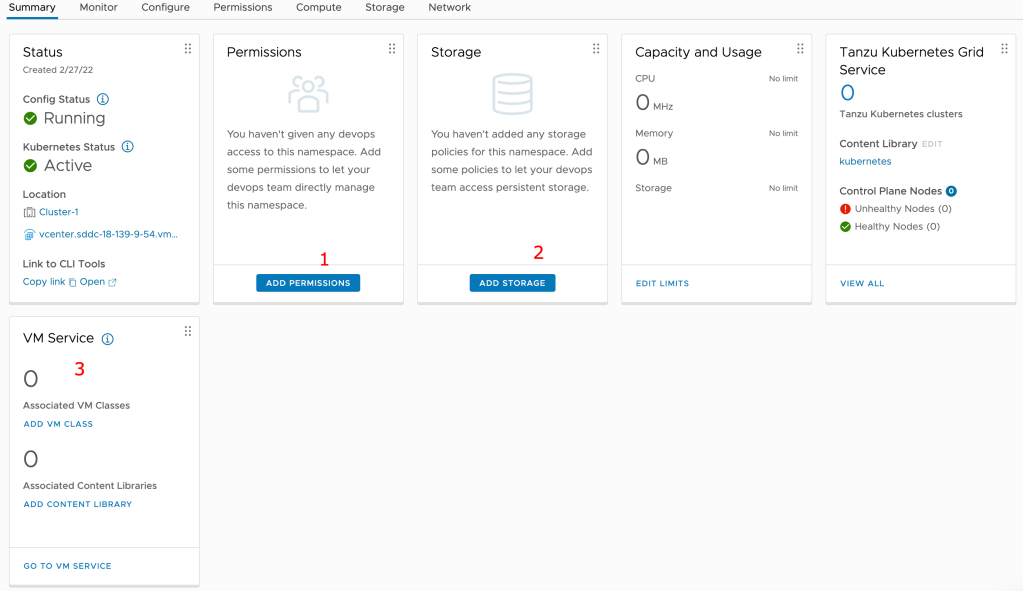

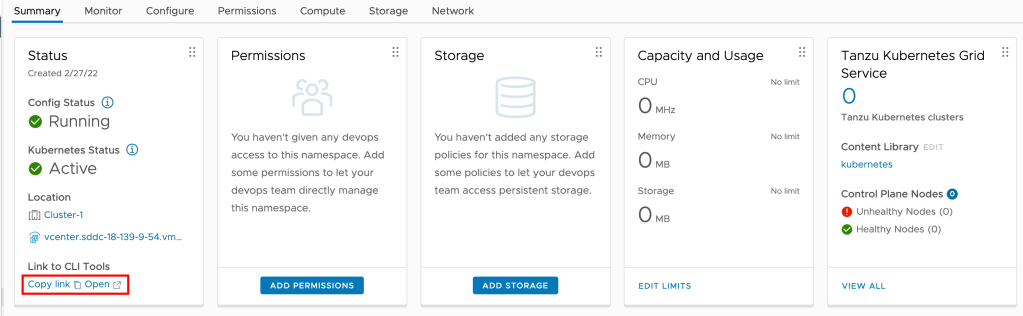

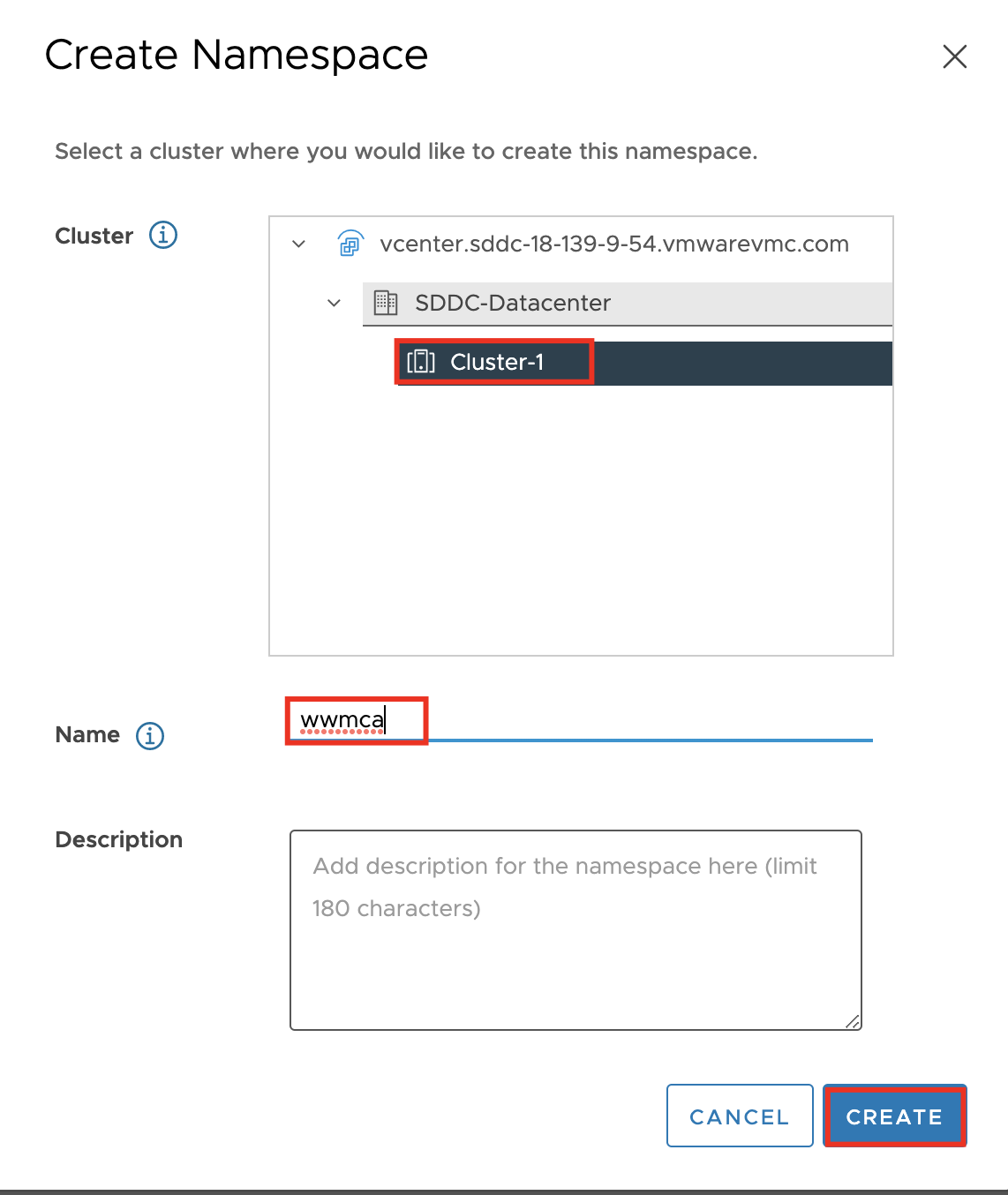

Persona (Virtualization/vSphere Administrator): The vSphere administrator creates a vSphere Namespace on the Supervisor Cluster and sets resource limits and permissions on the namespace so that DevOps engineers can access it. The administrator then provides the URL of the Kubernetes control plane to the DevOps engineers, who can use it to create their own Kubernetes clusters and run their workloads.

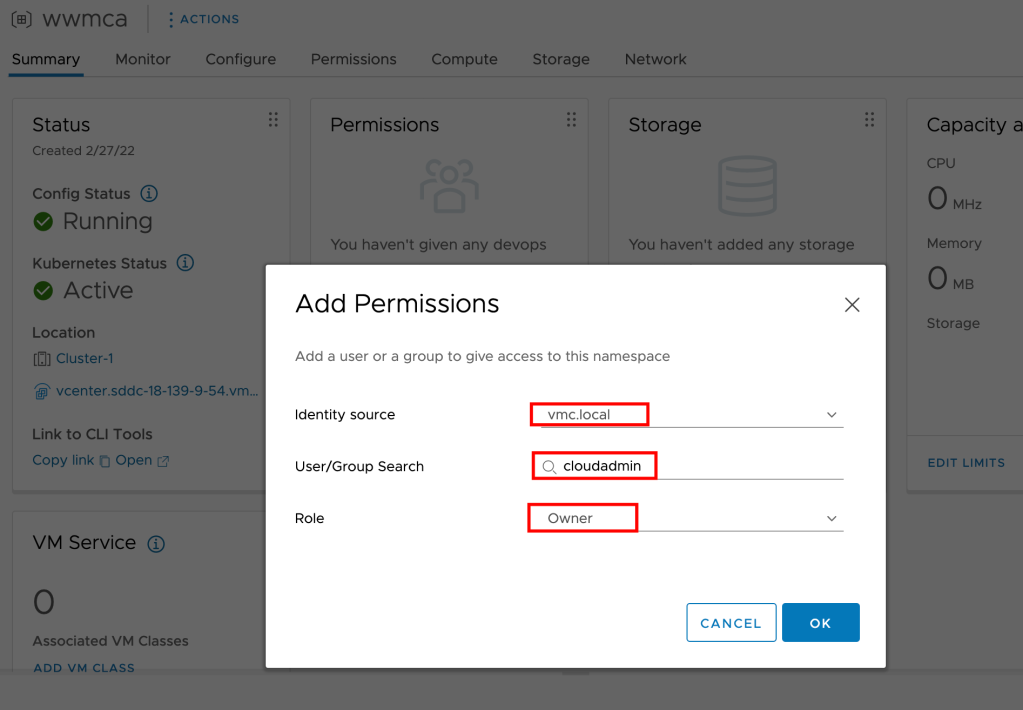

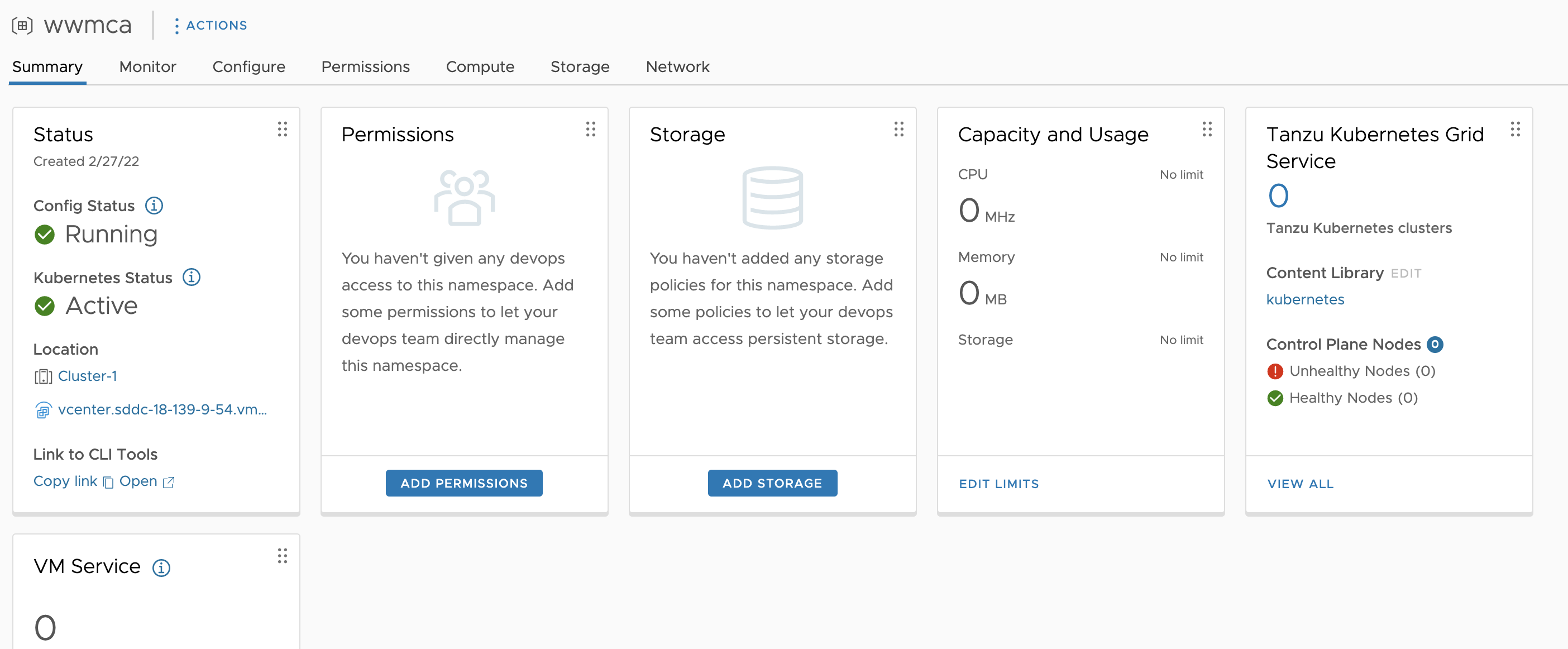

Step 1: Set permissions so that DevOps engineers can access the namespace.

From the Permissions pane, select Add Permissions.

Select an identity source, a user or a group, and a role, and click OK.

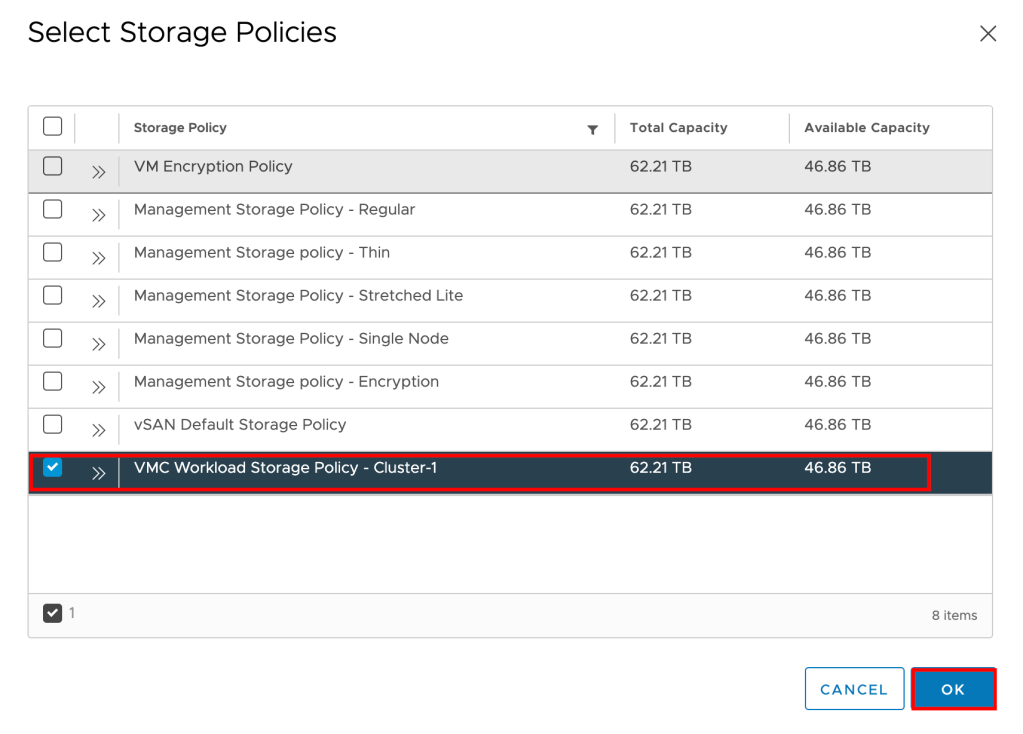

Step 2: Assign persistent storage to the namespace. Storage policies that you assign to the namespace control how persistent volumes and Tanzu Kubernetes cluster nodes are placed within datastores in the SDDC environment.

From the Storage pane, select Add Storage.

Select a storage policy to control datastore placement of persistent volumes and click OK

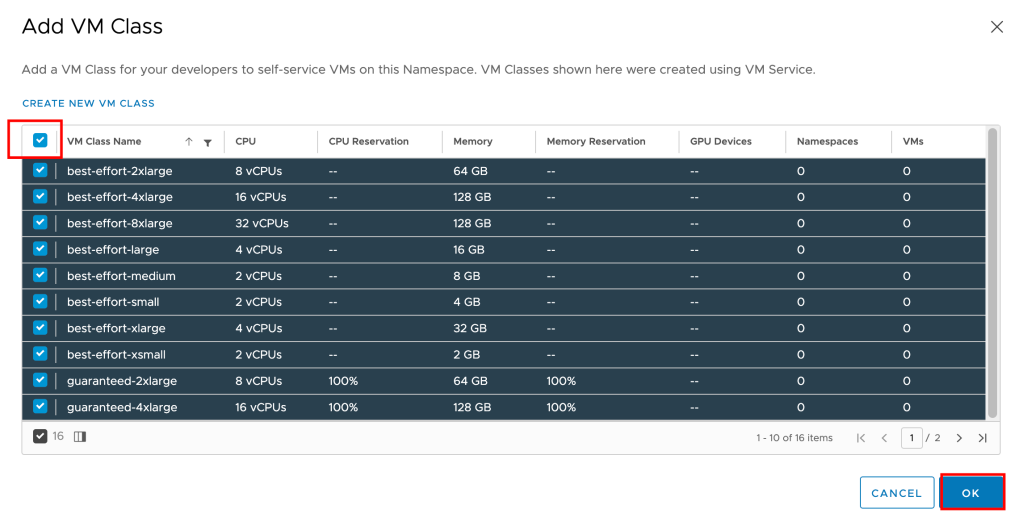

The VM class is a VM specification that can be used to request a set of resources for a VM. The VM class is controlled and managed by a vSphere administrator, and defines such parameters as the number of virtual CPUs, memory capacity, and reservation settings. The defined parameters are backed and guaranteed by the underlying infrastructure resources of a Supervisor Cluster.

Workload Management offers several default VM classes. Generally, each default class type comes in two editions: guaranteed and best effort. A guaranteed edition fully reserves resources that a VM specification requests. A best effort class edition does not and allows resources to be overcommitted. Typically, a guaranteed type is used in a production environment.
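To make the difference between the two editions concrete, here is a small sketch of the reservation arithmetic. It simplifies things on purpose: it assumes a guaranteed class reserves its full memory and a best effort class reserves nothing, and the 2 vCPU / 4 GiB sizing is an illustrative value rather than something read from a real Supervisor Cluster. `VMClass` and `reserved_for_cluster` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VMClass:
    """Simplified model of a VM class (illustrative, not the real API object)."""
    name: str
    cpus: int
    memory_gib: int
    guaranteed: bool

    def reserved_memory_gib(self) -> int:
        # Assumption: guaranteed reserves everything, best effort reserves nothing.
        return self.memory_gib if self.guaranteed else 0


def reserved_for_cluster(vm_class: VMClass, node_count: int) -> int:
    """Memory (GiB) that would be locked up by node_count nodes of this class."""
    return vm_class.reserved_memory_gib() * node_count


# Illustrative sizing for a "small" class in both editions.
guaranteed_small = VMClass("guaranteed-small", cpus=2, memory_gib=4, guaranteed=True)
best_effort_small = VMClass("best-effort-small", cpus=2, memory_gib=4, guaranteed=False)

if __name__ == "__main__":
    print(reserved_for_cluster(guaranteed_small, 3))   # 12
    print(reserved_for_cluster(best_effort_small, 3))  # 0
```

This is why guaranteed classes eat into the namespace's resource limits much faster, and why best effort classes are often a reasonable fit for dev and test clusters.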

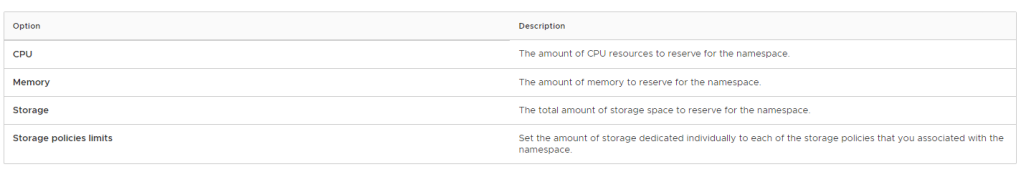

The vSphere administrator can set up additional limits based on use cases and requirements.

Copy the namespace URL by clicking on “Copy link” and give it to your DevOps/Platform admin.

Persona (DevOps/Platform Administrator)

How to Access and Work?

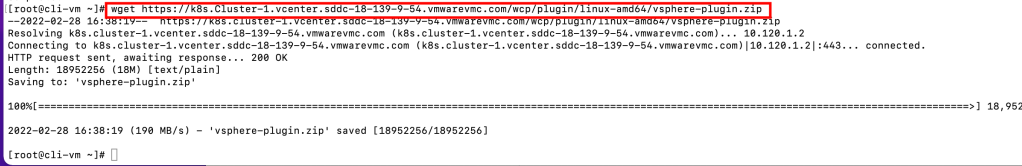

The DevOps or platform administrator can access the newly created namespace from a new VM (clientvm) or from their own desktop or laptop, and then create a new Kubernetes cluster. If you use a new VM, power it on once it is provisioned, SSH to it, and download the command-line tools from vCenter by running the command below. Make sure the address shown in the red box in the screenshot is changed to the Supervisor Cluster address that you copied earlier:

#wget https://k8s.Cluster-1.vcenter.sddc-18-139-9-54.vmwarevmc.com/wcp/plugin/linux-amd64/vsphere-plugin.zip

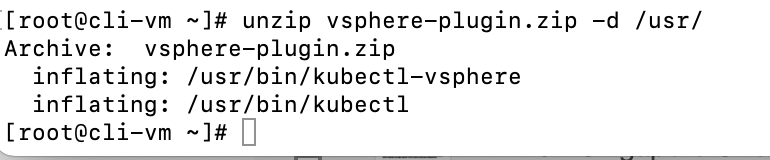

Unzip the archive using the command below:

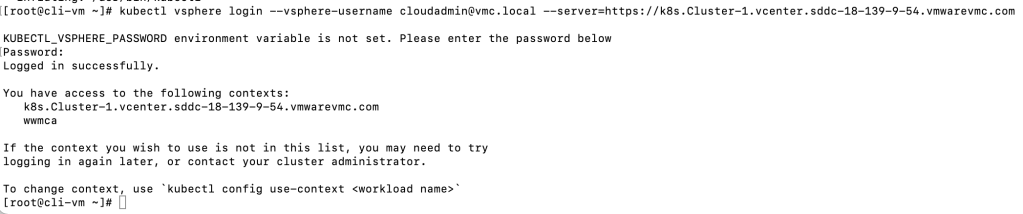

Now let's log in to the Supervisor Cluster by entering the following:

kubectl vsphere login --vsphere-username cloudadmin@vmc.local --server=https://k8s.Cluster-1.vcenter.sddc-18-139-9-54.vmwarevmc.com

Enter the password for cloudadmin or any other user to complete the login.
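Both the plugin download URL and the login command are built from the same Supervisor Cluster address, so it is easy to derive them from the link you copied. The sketch below assumes that the copied link is either a bare host name or an `https://` URL pointing at the control plane; `plugin_url` and `login_command` are hypothetical helper names, and the path and flags are simply the ones used in the commands above.

```python
import shlex
from urllib.parse import urlparse


def supervisor_address(link: str) -> str:
    """Extract the host part from a copied link or a bare host name."""
    parsed = urlparse(link if "://" in link else f"https://{link}")
    # netloc keeps the original casing of the host name.
    return parsed.netloc


def plugin_url(link: str) -> str:
    """URL of the Linux kubectl vSphere plugin, as used in the wget command."""
    return f"https://{supervisor_address(link)}/wcp/plugin/linux-amd64/vsphere-plugin.zip"


def login_command(link: str, username: str) -> list:
    """Argument list for the kubectl vsphere login command shown above."""
    return [
        "kubectl", "vsphere", "login",
        "--vsphere-username", username,
        f"--server=https://{supervisor_address(link)}",
    ]


if __name__ == "__main__":
    copied = "https://k8s.Cluster-1.vcenter.sddc-18-139-9-54.vmwarevmc.com/"
    print("wget", plugin_url(copied))
    print(shlex.join(login_command(copied, "cloudadmin@vmc.local")))
```

Returning the login command as a list makes it easy to hand to `subprocess.run` later if you want to script the login; the password prompt stays interactive.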

From here onwards, DevOps engineers can create their own Kubernetes clusters and deploy applications. They can also utilize VMware's multi-cloud management platform to spin up Kubernetes clusters using a GUI.

For DevOps engineers to use the GUI, the vSphere administrator needs to register the VMware Cloud on AWS management cluster with Tanzu Mission Control. Let's do that:

Register This Management Cluster with Tanzu Mission Control

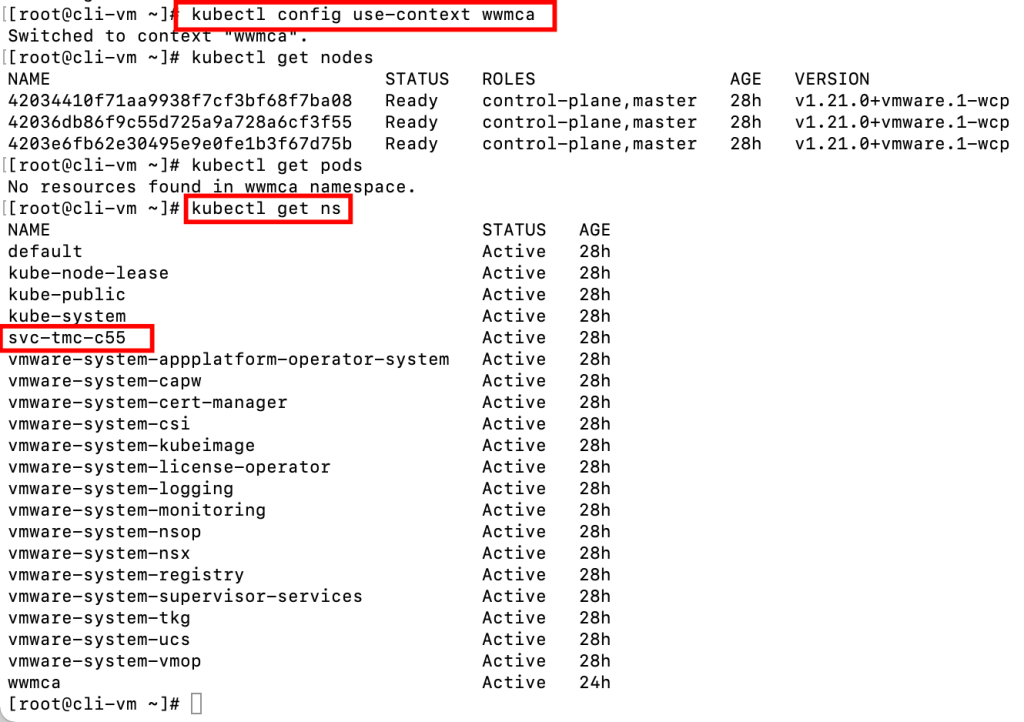

Tanzu service ships with a namespace for Tanzu Mission Control. This namespace exists on the Supervisor Cluster where you install the Tanzu Mission Control agent.

The vSphere Namespace provided for Tanzu Mission Control is identified as svc-tmc-cXX

To integrate the Tanzu Kubernetes Grid Service with Tanzu Mission Control, install the agent on the Supervisor Cluster.

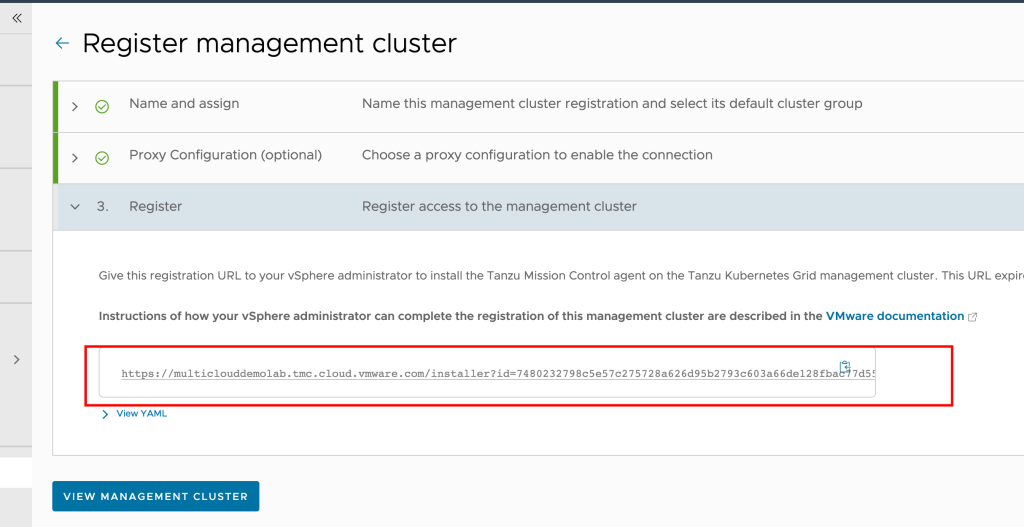

Register the Supervisor Cluster with Tanzu Mission Control and obtain the Registration URL. See Register a Management Cluster with Tanzu Mission Control.

On the client VM, create a .yaml file (tmc.yaml in this example) with the content below:

    apiVersion: installers.tmc.cloud.vmware.com/v1alpha1
    kind: AgentInstall
    metadata:
      name: tmc-agent-installer-config
      namespace: <NAMESPACE captured in above step>
    spec:
      operation: INSTALL
      registrationLink: <TMC-REGISTRATION-URL captured from TMC console>
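Indentation matters in this manifest, and it is easy to lose when copying from a web page. As a convenience, here is a minimal sketch that writes the file with the right nesting. The namespace and registration link used in the example are placeholders, `render_agent_install` is a hypothetical helper, and the `svc-tmc-` prefix check is based only on the namespace naming described above.

```python
MANIFEST_TEMPLATE = """\
apiVersion: installers.tmc.cloud.vmware.com/v1alpha1
kind: AgentInstall
metadata:
  name: tmc-agent-installer-config
  namespace: {namespace}
spec:
  operation: INSTALL
  registrationLink: "{registration_link}"
"""


def render_agent_install(namespace: str, registration_link: str) -> str:
    """Fill in the AgentInstall manifest shown above."""
    if not namespace.startswith("svc-tmc-"):
        raise ValueError("expected the TMC namespace, e.g. svc-tmc-cXX")
    if not registration_link.startswith("https://") or '"' in registration_link:
        raise ValueError("registration link should be a plain https:// URL")
    return MANIFEST_TEMPLATE.format(
        namespace=namespace, registration_link=registration_link
    )


if __name__ == "__main__":
    # Placeholder values; use your own namespace and the URL from the TMC console.
    manifest = render_agent_install("svc-tmc-c8", "https://example.invalid/registration")
    with open("tmc.yaml", "w", encoding="utf-8") as handle:
        handle.write(manifest)
    print(manifest)
```

The registration link is wrapped in double quotes so that characters such as `?` and `&` in the URL cannot confuse a YAML parser.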

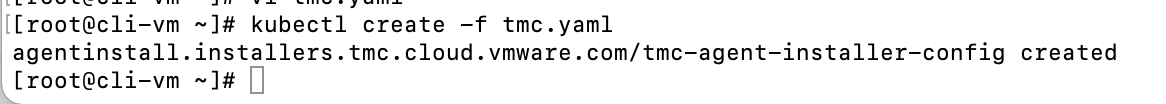

Apply this YAML file using:

#kubectl create -f tmc.yaml

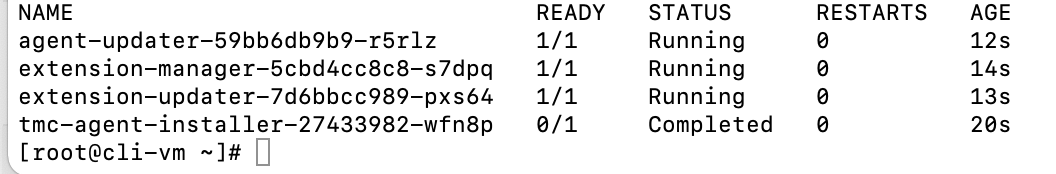

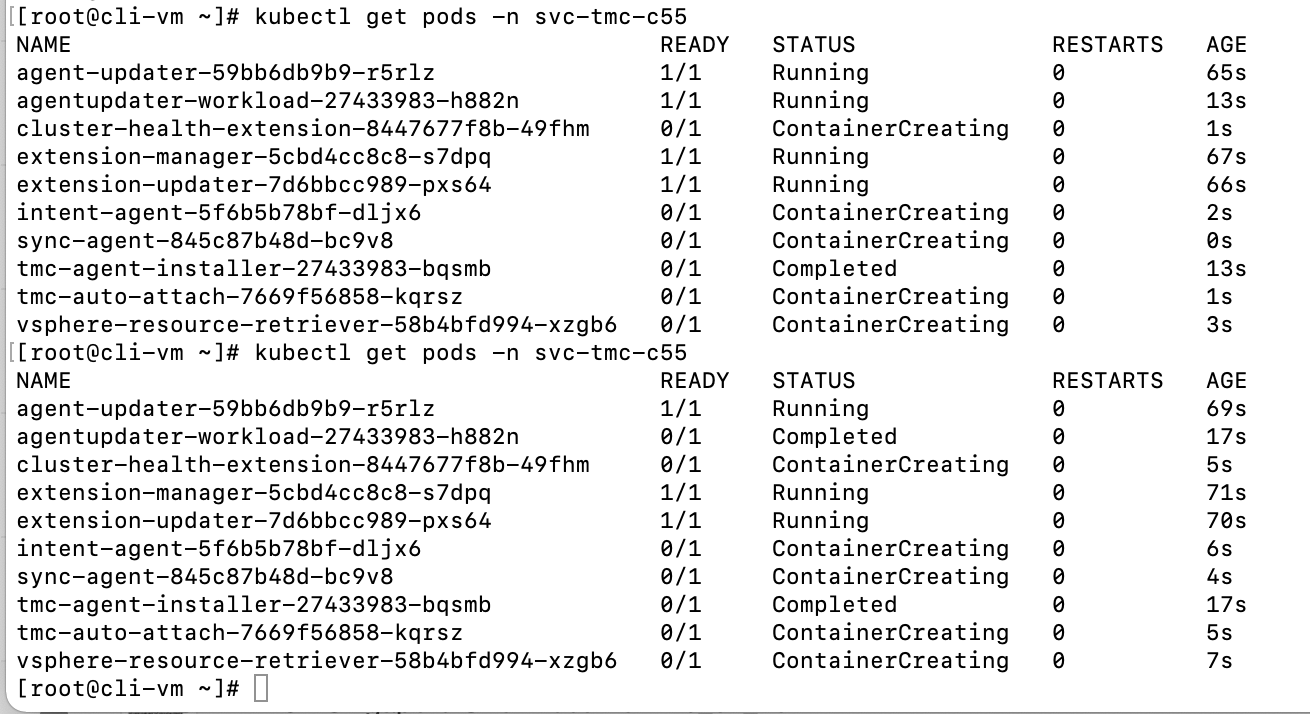

You can also check the status of the TMC registration by running the command below:

#kubectl get pods -n <ns name>

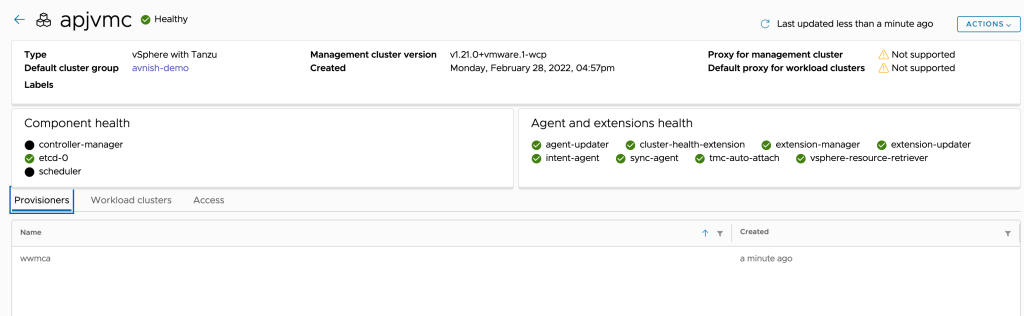

Now go back to Tanzu Mission Control, and after some time you should see your Supervisor Cluster in a ready state.

DevOps/Platform admins are now ready to deploy TKC clusters as well as containers. This completes this part of the blog. In the next part I will write about how to create TKC clusters, run applications within containers, and expose them to the internet.
